Mistral

Portkey helps bring Mistral's APIs to production with its observability suite & AI Gateway. Use the Mistral API through Portkey for:

Enhanced Logging: Track API usage with detailed insights and custom segmentation.

Production Reliability: Automated fallbacks, load balancing, retries, timeouts, and caching.

Continuous Improvement: Collect and apply user feedback.

1.1 Setup & Logging

Obtain your Portkey API Key.

Set it in your environment: $ export PORTKEY_API_KEY=PORTKEY_API_KEY

Set your Mistral API key: $ export MISTRAL_API_KEY=MISTRAL_API_KEY

Install the SDK: pip install portkey-ai or npm i portkey-ai
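The exported variables can be read at runtime rather than hardcoding keys in source. The following is a minimal sketch, assuming the two variable names from the setup steps. `portkey_client_kwargs` is a hypothetical helper, not part of the portkey-ai SDK; it only assembles the keyword arguments that the examples in this section pass to `Portkey(...)`.

```python
import os


def portkey_client_kwargs(env=None):
    """Build Portkey(...) keyword arguments from environment variables.

    Hypothetical helper for illustration; not part of the portkey-ai SDK.
    """
    env = os.environ if env is None else env
    required = ("PORTKEY_API_KEY", "MISTRAL_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "api_key": env["PORTKEY_API_KEY"],
        "provider": "mistral-ai",
        # The Mistral key is forwarded as a Bearer token, as in this section's examples.
        "Authorization": "Bearer " + env["MISTRAL_API_KEY"],
    }


# Usage (requires `pip install portkey-ai`):
# from portkey_ai import Portkey
# portkey = Portkey(**portkey_client_kwargs())
```

Failing early on a missing variable tends to give a clearer error than an authentication failure at request time.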

""" PORTKEY PYTHON SDK """

from portkey_ai import Portkey

portkey = Portkey(

api_key="PORTKEY_API_KEY",

# ************************************

provider="mistral-ai",

Authorization="Bearer MISTRAL_API_KEY"

# ************************************

)

response = portkey.chat.completions.create(

model="mistral-tiny",

messages = [{ "role": "user", "content": "c'est la vie" }]

)

import Portkey from 'portkey-ai';

const portkey = new Portkey({

apiKey: "PORTKEY_API_KEY",

// ***********************************

provider: "mistral-ai",

Authorization: "Bearer MISTRAL_API_KEY"

// ***********************************

})

async function main(){

const response = await portkey.chat.completions.create({

model: "mistral-tiny",

messages: [{ role: 'user', content: "c'est la vie" }]

});

}

main()

1.2 Enhanced Observability

Trace requests with a single ID.

Append custom tags for request segmenting & in-depth analysis.

Just add the relevant headers to your request:

from portkey_ai import Portkey

portkey = Portkey(

api_key="PORTKEY_API_KEY",

provider="mistral-ai",

Authorization="Bearer MISTRAL_API_KEY"

)

response = portkey.with_options(

# ************************************

trace_id="ux5a7",

metadata={"user": "john_doe"}

# ************************************

).chat.completions.create(

model="mistral-tiny",

messages = [{ "role": "user", "content": "c'est la vie" }]

)

import Portkey from 'portkey-ai';

const portkey = new Portkey({

apiKey: "PORTKEY_API_KEY",

provider: "mistral-ai",

Authorization: "Bearer MISTRAL_API_KEY"

})

async function main(){

const response = await portkey.chat.completions.create({

model: "mistral-tiny",

messages: [{ role: 'user', content: "c'est la vie" }]

},{

// ***********************************

traceID: "ux5a7",

metadata: {"user": "john_doe"}

});

}

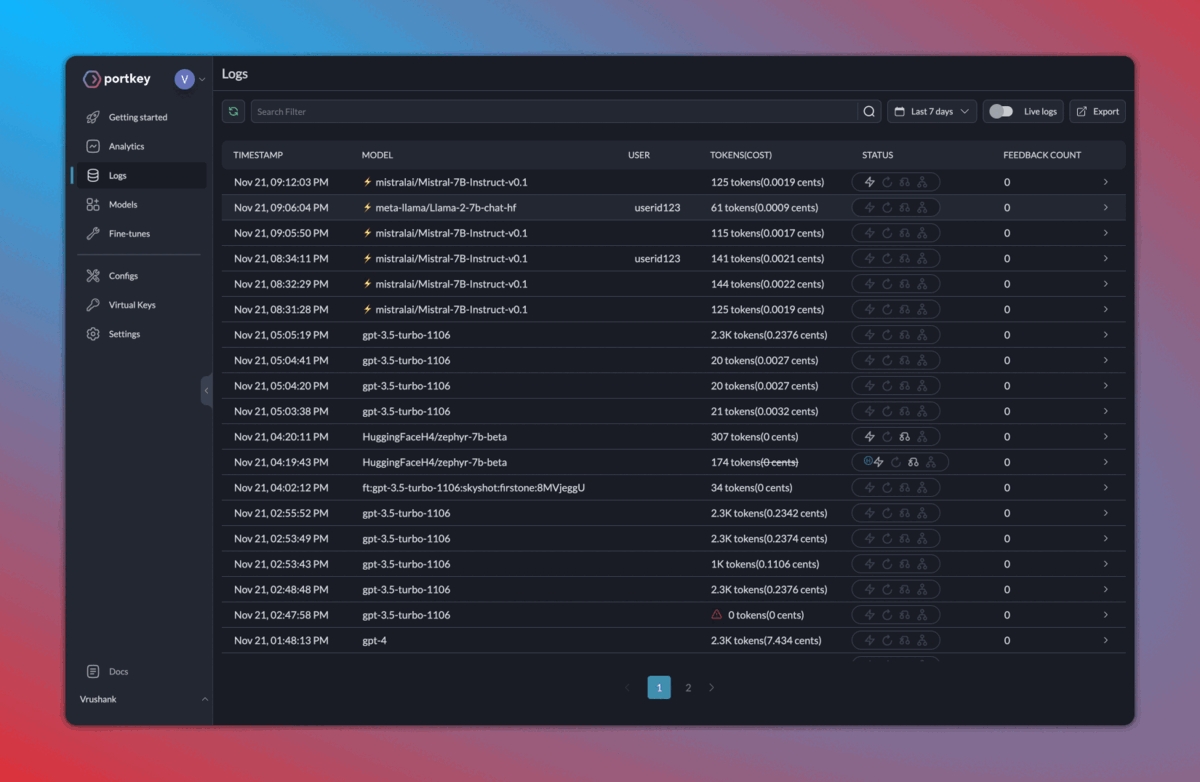

main()Here’s how your logs will appear on your Portkey dashboard:
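In practice a trace ID is usually generated per request rather than hardcoded. The sketch below shows one way to build the `trace_id` and `metadata` options used in the Python example. `request_options` is a hypothetical helper, and the `environment` metadata key is an illustrative assumption, not a field required by Portkey.

```python
import uuid


def request_options(user_id, environment="production"):
    """Return keyword arguments for portkey.with_options(...).

    Hypothetical helper; the "environment" key is an illustrative assumption.
    """
    return {
        # A fresh ID per logical request lets related calls be grouped in the logs.
        "trace_id": uuid.uuid4().hex,
        "metadata": {"user": user_id, "environment": environment},
    }


opts = request_options("john_doe")
print(opts["metadata"])

# Usage with the client from the examples in this section:
# response = portkey.with_options(**opts).chat.completions.create(...)
```

Keeping the generated `trace_id` around is useful later, since the feedback call in section 3 refers to a request by its trace ID.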

2. Caching, Fallbacks, Load Balancing

Fallbacks: Ensure your application remains functional even if a primary service fails.

Load Balancing: Efficiently distribute incoming requests among multiple models.

Semantic Caching: Reduce costs and latency by intelligently caching results.

Toggle these features by saving Configs (from the Portkey dashboard > Configs tab).
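To make the fallback behaviour concrete, here is a purely conceptual sketch of what "try the primary, then the next target" means. It is not Portkey's implementation: the gateway does this server-side, and `call_with_fallback` and `fake_call` are hypothetical names that simulate a failing primary model.

```python
def call_with_fallback(targets, call):
    """Try each target in order and return (target, result) for the first success.

    Conceptual illustration only; a gateway performs this on the server side.
    """
    errors = []
    for target in targets:
        try:
            return target, call(target)
        except Exception as exc:  # a real gateway would react to specific failures
            errors.append((target, exc))
    raise RuntimeError(f"All targets failed: {errors}")


def fake_call(model):
    # Stand-in for a provider request: the primary model is simulated as unavailable.
    if model == "mistral-medium":
        raise ConnectionError("primary unavailable")
    return f"response from {model}"


used, result = call_with_fallback(["mistral-medium", "mistral-tiny"], fake_call)
print(used, "->", result)  # mistral-tiny -> response from mistral-tiny
```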

To enable semantic caching plus a fallback from Mistral-Medium to Mistral-Tiny, your Portkey config would look like this:

{

"cache": {"mode": "semantic"},

"strategy": {"mode": "fallback"},

"targets": [

{

"provider": "mistral-ai", "api_key": "...",

"override_params": {"model": "mistral-medium"}

},

{

"provider": "mistral-ai", "api_key": "...",

"override_params": {"model": "mistral-tiny"}

}

]

}

Now, just set the Config ID while instantiating Portkey:

""" PORTKEY PYTHON SDK """

from portkey_ai import Portkey

portkey = Portkey(

api_key="PORTKEY_API_KEY",

# ************************************

config="pp-mistral-cache-xx"

# ************************************

)

response = portkey.chat.completions.create(

model="mistral-tiny",

messages = [{ "role": "user", "content": "c'est la vie" }]

)

import Portkey from 'portkey-ai';

const portkey = new Portkey({

apiKey: "PORTKEY_API_KEY",

// ***********************************

config: "pp-mistral-cache-xx"

// ***********************************

})

async function main(){

const response = await portkey.chat.completions.create({

model: "mistral-tiny",

messages: [{ role: 'user', content: "c'est la vie" }]

});

}

main()

For more on Configs and other gateway features like Load Balancing, check out the docs.
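As a rough illustration of load balancing, the sketch below builds a config dict in the same shape as the fallback config shown earlier. It assumes the config schema accepts a `loadbalance` strategy mode and a per-target `weight` field; verify both against the Configs documentation before relying on them. `loadbalance_config` is a hypothetical helper, and the `api_key` value stays a placeholder.

```python
import json


def loadbalance_config(model_weights, provider="mistral-ai"):
    """Build a config dict that splits traffic across models by weight.

    Assumption: "loadbalance" mode and "weight" target field; check the docs.
    """
    if not model_weights:
        raise ValueError("at least one model is required")
    if any(weight <= 0 for weight in model_weights.values()):
        raise ValueError("weights must be positive")
    return {
        "strategy": {"mode": "loadbalance"},
        "targets": [
            {
                "provider": provider,
                "api_key": "...",  # placeholder, as in the fallback config
                "weight": weight,
                "override_params": {"model": model},
            }
            for model, weight in model_weights.items()
        ],
    }


config = loadbalance_config({"mistral-medium": 0.7, "mistral-tiny": 0.3})
print(json.dumps(config, indent=2))
```

The printed JSON can be pasted into the Configs tab and referenced by its Config ID, in the same way as the caching config.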

3. Collect Feedback

Gather weighted feedback from users and improve your app:

from portkey_ai import Portkey

portkey = Portkey(

api_key="PORTKEY_API_KEY"

)

def send_feedback():

    # Attach feedback to a logged request via its trace ID

    portkey.feedback.create(

        trace_id='REQUEST_TRACE_ID',

        value=0 # For thumbs down

    )

send_feedback()

import Portkey from 'portkey-ai';

const portkey = new Portkey({

apiKey: "PORTKEY_API_KEY"

});

const sendFeedback = async () => {

await portkey.feedback.create({

traceID: "REQUEST_TRACE_ID",

value: 1 // For thumbs up

});

}

await sendFeedback();

Conclusion

Integrating Portkey with Mistral helps you build resilient LLM apps from the get-go. With features like semantic caching, observability, load balancing, feedback, and fallbacks, you can ensure optimal performance and continuous improvement.

Read full Portkey docs here. | Reach out to the Portkey team.
